Build an MCP Server which answers questions with Retrieval Augmented Generation

Last edited 133 days ago

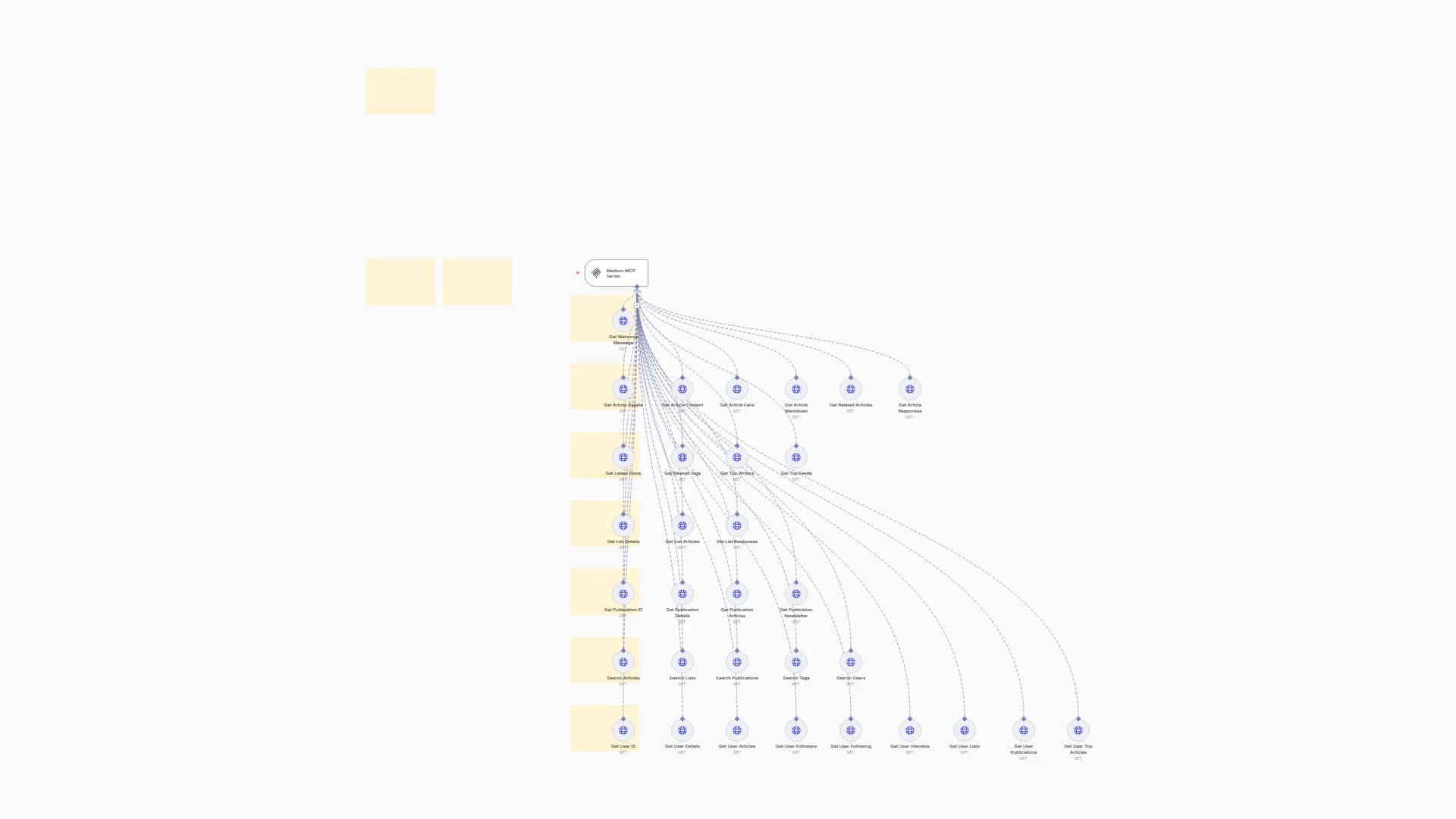

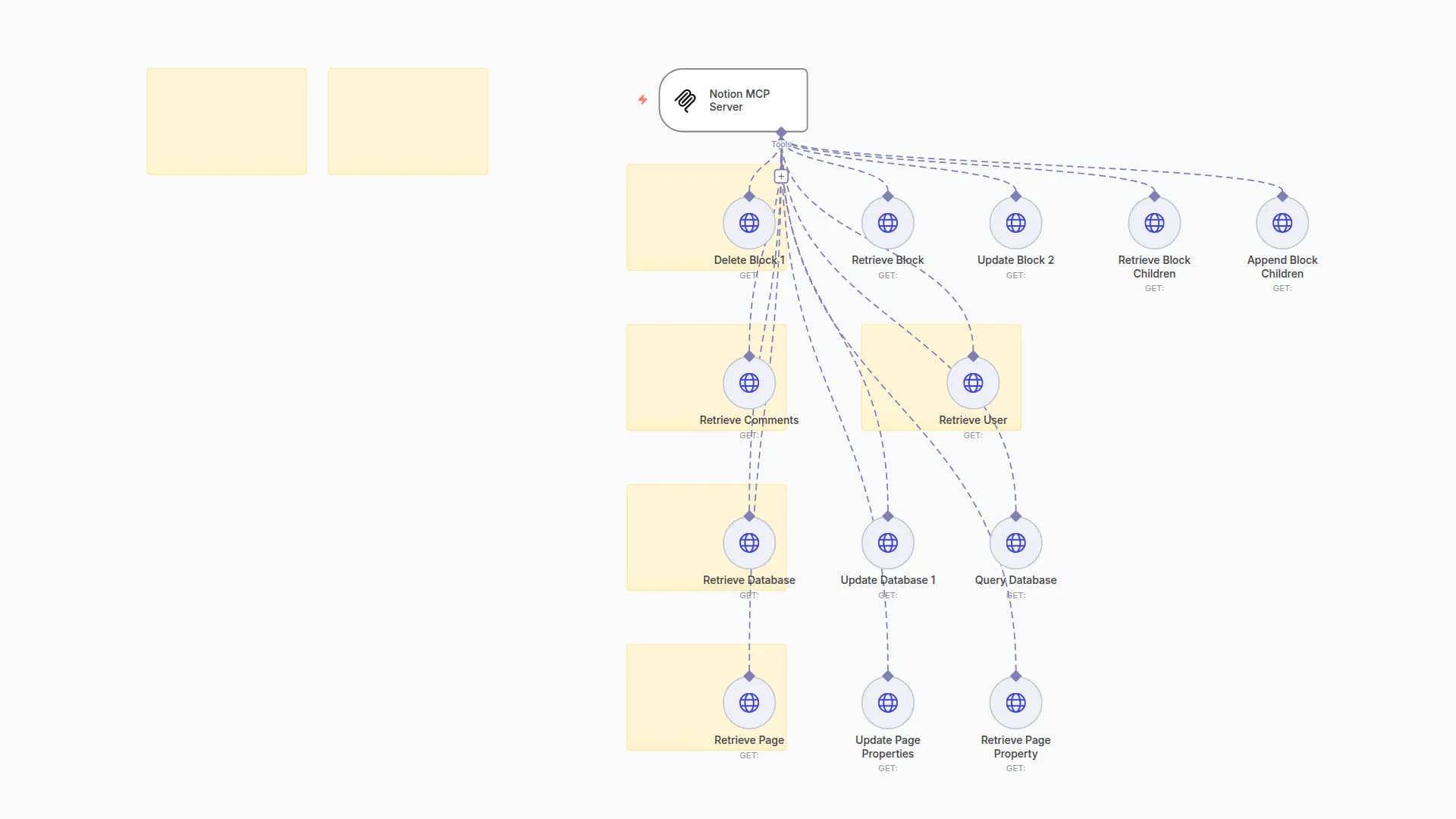

Build an MCP Server that has access to a semantic database and uses it to perform Retrieval-Augmented Generation (RAG).

Tutorial

Click here to watch the full tutorial on YouTube

How it works

This MCP Server has access to a local semantic database (Qdrant) and uses it to answer questions sent by the MCP Client.
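The retrieval step behind this can be pictured with a minimal sketch. It is not the workflow's actual implementation: a small in-memory list stands in for Qdrant, the three-dimensional vectors are toy values rather than real embeddings, and the helper names (`retrieve`, `build_prompt`) are made up for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query_vector, store, top_k=2):
    """Return the top_k (score, text) pairs most similar to query_vector."""
    scored = [(cosine_similarity(query_vector, vec), text) for vec, text in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]

def build_prompt(question, passages):
    """Put the retrieved passages in front of the question for the LLM."""
    context = "\n".join(f"- {text}" for _, text in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Toy stand-in for the vector database (assumption: real entries would be
# document chunks with embeddings produced by the embeddings model).
STORE = [
    ([1.0, 0.0, 0.0], "Invoices are due within 30 days."),
    ([0.0, 1.0, 0.0], "The office is closed on public holidays."),
    ([0.9, 0.1, 0.0], "Late invoices incur a 2% fee."),
]

hits = retrieve([1.0, 0.05, 0.0], STORE, top_k=2)
prompt = build_prompt("When are invoices due?", hits)
print(prompt)
```

In the real flow, the query vector would come from the embeddings model and the similarity search would be done by Qdrant; only the overall shape (embed, search, build prompt) carries over.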

AI Agent Template

Click here to navigate to the AI Agent n8n workflow which uses this MCP server

Warning

This flow runs only locally and cannot be executed on the n8n cloud platform, because it depends on the MCP Client Community Node.

Installation

- Install n8n + Ollama + Qdrant using the Self-hosted AI starter kit
- Make sure to install Llama 3.2 and, as the embeddings model, mxbai-embed-large
- Activate the n8n flow
- Run the "RAG Ingestion Pipeline" and upload some PDF documents
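Before running the ingestion pipeline, it can help to confirm that the three services are reachable. The sketch below only tests whether a TCP port accepts connections. The port numbers are the usual defaults (5678 for n8n, 11434 for Ollama, 6333 for Qdrant) and are an assumption about your setup, so adjust them if your installation maps ports differently.

```python
import socket

# Assumed default ports; change these if your setup differs.
DEFAULT_SERVICES = {
    "n8n": 5678,
    "Ollama": 11434,
    "Qdrant": 6333,
}

def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def check_services(host="localhost", services=DEFAULT_SERVICES):
    """Map each service name to whether its port accepts connections."""
    return {name: is_port_open(host, port) for name, port in services.items()}

if __name__ == "__main__":
    for name, up in check_services().items():
        print(f"{name}: {'reachable' if up else 'not reachable'}")
```

An open port shows only that something is listening there, not that the required models have been installed.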

How to use it

- Run the MCP Client workflow and ask a question. The answer comes either from the semantic database or from the search engine API.
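Under the Model Context Protocol, a client invokes a server tool by sending a JSON-RPC 2.0 `tools/call` request. The sketch below builds such a message to show roughly what travels between the MCP Client and this server when you ask a question. The tool name `rag_search` and the `question` argument are hypothetical; the names this workflow actually exposes may differ.

```python
import json

def build_tool_call(request_id, tool_name, arguments):
    """Build an MCP tools/call request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }

# Hypothetical tool and argument names, used for illustration only.
request = build_tool_call(
    1,
    "rag_search",
    {"question": "What does the contract say about termination?"},
)
print(json.dumps(request, indent=2))
```

In n8n the MCP Client node builds this message for you, so the sketch is useful mainly for debugging or for calling the server from outside n8n.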

More detailed instructions

Missed a step? Find more detailed instructions here: https://brightdata.com/blog/ai/news-feed-n8n-openai-bright-data

New to n8n?

Need help building new n8n workflows? Process automation for you or your company will save you time and money, and it's completely free!