Daily Postgres Table Backup to GitHub in CSV Format

Last edited 115 days ago

This workflow automatically backs up all public Postgres tables into a GitHub repository as CSV files every 24 hours.

It keeps your database snapshots up to date by updating existing files when data changes and creating new files for new tables.

How it works:

- Schedule Trigger – Runs daily to start the backup process.

- GitHub Integration – Lists existing files in the target repo to avoid duplicates.

- Postgres Query – Fetches all table names from the `public` schema.

- Data Extraction – Selects all rows from each table.

- Convert to CSV – Saves table data as CSV files.

- Conditional Upload – Decides per table:

  - If the table already exists in GitHub → Update the file.

  - If new → Upload a new file.
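The conversion and conditional-upload steps above can be sketched in plain Python. This is only an illustration of the logic, not the workflow's actual node configuration: the `<table>.csv` file naming, the SQL text in `LIST_TABLES_SQL`, and the helper names `rows_to_csv` and `plan_uploads` are assumptions that do not appear in the original workflow description.

```python
import csv
import io

# Assumed query for listing tables in the public schema; the SQL the
# workflow actually runs is not shown in the description.
LIST_TABLES_SQL = (
    "SELECT table_name FROM information_schema.tables "
    "WHERE table_schema = 'public' AND table_type = 'BASE TABLE'"
)


def rows_to_csv(columns, rows):
    """Serialize rows (sequences of values) to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(columns)
    writer.writerows(rows)
    return buf.getvalue()


def plan_uploads(table_names, existing_files):
    """Return 'update' for tables whose <table>.csv is already in the repo,
    and 'create' for tables that have no file yet (hypothetical naming)."""
    existing = set(existing_files)
    return {
        name: ("update" if f"{name}.csv" in existing else "create")
        for name in table_names
    }


if __name__ == "__main__":
    # Example: one table already backed up, one new table.
    print(plan_uploads(["users", "orders"], ["users.csv", "README.md"]))
    print(rows_to_csv(["id", "name"], [(1, "Ada"), (2, "Grace")]))
```

Checking membership against the list of existing repository files before uploading is what lets each table map to exactly one file: the file is updated in place on later runs instead of being duplicated.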

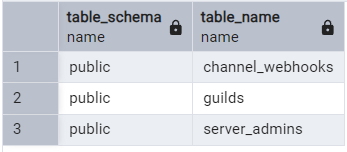

Postgres Tables Preview

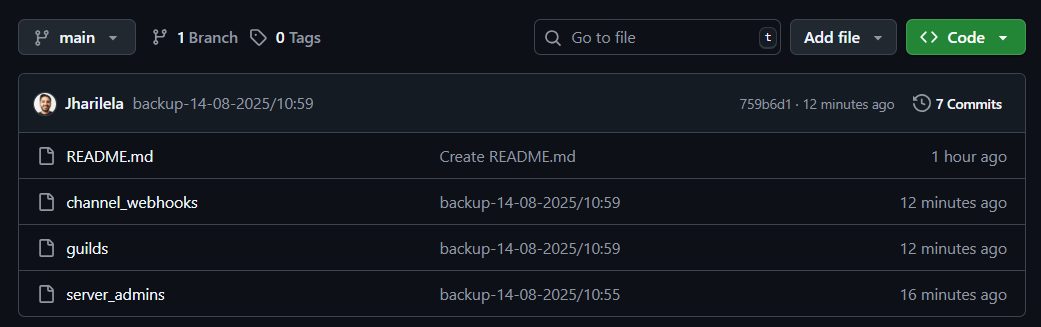

GitHub Backup Preview

Use case:

Well suited to developers, analysts, and data engineers who want automated daily backups of Postgres data without manual exports, with the change history of each file kept under version control in GitHub.

Requirements:

- Postgres credentials with read access.

- A GitHub repository, with GitHub OAuth2 credentials connected in n8n.
