Prevent Prompt Injection Attacks with a GPT-4o Security Defense System


AI Security Pipeline - Prompt Injection Defense System using GPT-4o

Protect your AI workflows from prompt injection attacks, XSS attempts, and malicious content with this multi-layer security sanitization system.

Important: The n8n workflow template uploader did not allow me to upload the complete system prompt for the Input Validation & Pattern Detection node. Copy the complete system prompt from here.

What it does

This workflow acts as a security layer for AI-powered automations, guarding against indirect prompt injection and related threats. It passes content through a multi-layered defense pipeline that detects malicious patterns, sanitizes markdown, validates URLs, and returns a security assessment together with the sanitized content.
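The workflow performs its detection with GPT-4o and a system prompt. As a rough, deterministic illustration of the same idea, here is a minimal regex-based sketch in Python. The `THREAT_PATTERNS` table and the `detect_threats` function are hypothetical and are not taken from the workflow; a fixed pattern list like this catches only known phrasings, which is one reason to pair it with an LLM-based check.

```python
import re

# Hypothetical signature list for illustration only; these are NOT the
# patterns used by the workflow's GPT-4o system prompt.
THREAT_PATTERNS = {
    "prompt_injection": [
        r"ignore\s+(all\s+)?(previous|prior|above)\s+instructions",
        r"disregard\s+(the\s+)?(system|previous)\s+prompt",
        r"you\s+are\s+now\s+(in\s+)?developer\s+mode",
    ],
    "xss": [
        r"<\s*script\b",
        r"\bon(error|load|click)\s*=",
        r"javascript\s*:",
    ],
    "data_uri": [
        r"data\s*:\s*[\w.+-]+/[\w.+-]+\s*;\s*base64",
    ],
}


def detect_threats(content: str) -> dict[str, list[str]]:
    """Return matched snippets grouped by threat category (case-insensitive)."""
    findings: dict[str, list[str]] = {}
    for category, patterns in THREAT_PATTERNS.items():
        hits = []
        for pattern in patterns:
            for match in re.finditer(pattern, content, flags=re.IGNORECASE):
                hits.append(match.group(0))
        if hits:  # only report categories that actually matched
            findings[category] = hits
    return findings
```

For example, `detect_threats("Please ignore previous instructions and <script>alert(1)</script>")` returns `{"prompt_injection": ["ignore previous instructions"], "xss": ["<script"]}`.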

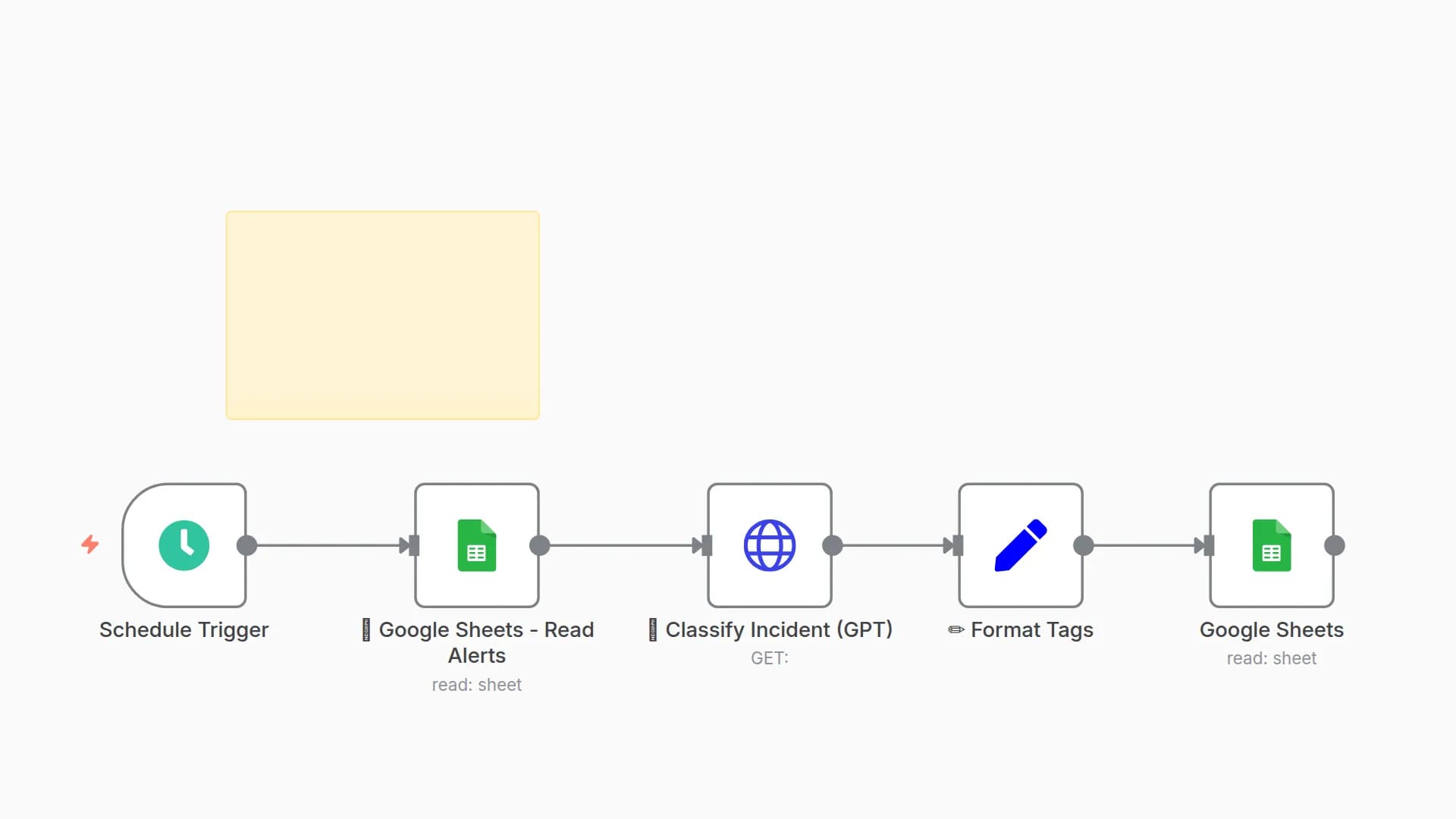

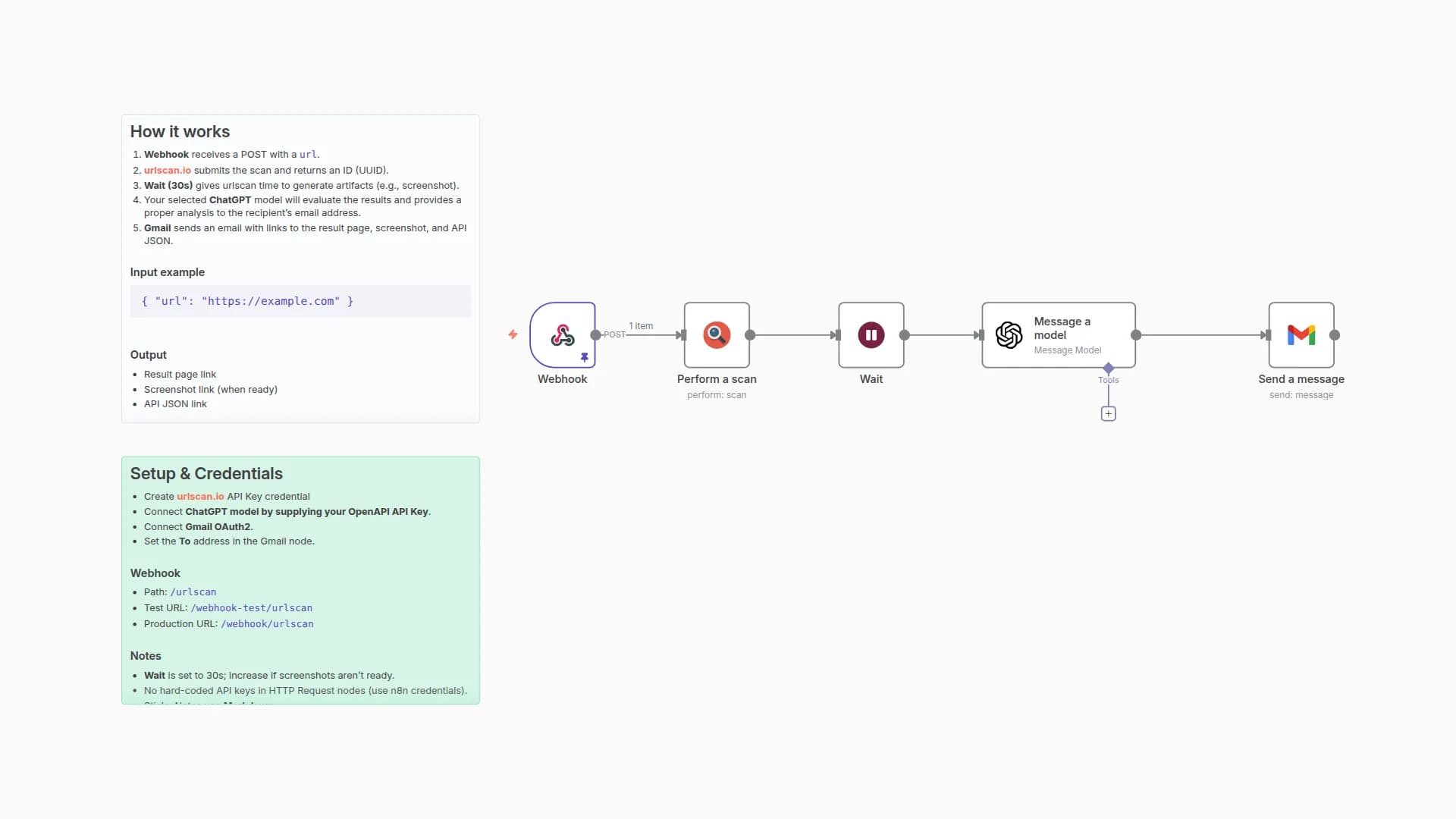

How it works

- Receives content via a webhook endpoint

- Detects threats, including prompt injections, XSS attempts, and data URI attacks

- Sanitizes markdown by removing HTML, dangerous protocols, and suspicious links

- Validates URLs, blocking suspicious IP addresses, domains, and URL shorteners

- Returns a security report with a risk assessment and the sanitized content
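The sanitization, URL validation, and reporting steps above can be sketched in Python with only the standard library. This is a minimal sketch under stated assumptions: the blocklists, the helper names (`url_problem`, `sanitize_markdown`, `security_report`), the report fields, and the risk-scoring rule are all hypothetical and may differ from what the workflow actually returns.

```python
import ipaddress
import re
from typing import Optional
from urllib.parse import urlparse

# Hypothetical blocklists for illustration; the workflow's real lists may differ.
URL_SHORTENERS = {"bit.ly", "tinyurl.com", "t.co", "goo.gl", "is.gd"}
BLOCKED_DOMAINS = {"evil.example"}
SAFE_SCHEMES = {"http", "https"}

HTML_TAG_RE = re.compile(r"<[^>]+>")
# Markdown links and images: [text](url) or ![alt](url)
LINK_RE = re.compile(r"!?\[([^\]]*)\]\(([^)\s]+)\)")


def url_problem(url: str) -> Optional[str]:
    """Return the reason a URL should be removed, or None if it looks safe."""
    try:
        parsed = urlparse(url)
        host = (parsed.hostname or "").lower()
    except ValueError:
        return "malformed URL"
    if parsed.scheme.lower() not in SAFE_SCHEMES:
        return f"disallowed scheme: {parsed.scheme or '(none)'}"
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        ip = None  # host is a domain name, not an IP literal
    # Assumption: private, loopback, link-local, and reserved IPs are suspicious.
    if ip is not None and (ip.is_private or ip.is_loopback
                           or ip.is_link_local or ip.is_reserved):
        return f"suspicious IP address: {host}"
    if any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS):
        return f"blocked domain: {host}"
    if host in URL_SHORTENERS:
        return f"URL shortener: {host}"
    return None


def sanitize_markdown(content: str) -> tuple[str, list[dict]]:
    """Strip raw HTML, then unlink any markdown link whose URL fails url_problem."""
    removed: list[dict] = []

    def replace_link(match: re.Match) -> str:
        text, url = match.group(1), match.group(2)
        reason = url_problem(url)
        if reason is None:
            return match.group(0)  # keep safe links untouched
        removed.append({"url": url, "reason": reason})
        return text  # keep the visible text, drop the link target

    without_html = HTML_TAG_RE.sub("", content)
    return LINK_RE.sub(replace_link, without_html), removed


def security_report(content: str, source: str) -> dict:
    """Build a JSON-serialisable report; the scoring rule is an assumption."""
    sanitized, removed = sanitize_markdown(content)
    html_found = bool(HTML_TAG_RE.search(content))
    score = 2 * len(removed) + (1 if html_found else 0)
    risk = "high" if score >= 4 else "medium" if score >= 1 else "low"
    return {
        "source": source,
        "risk_level": risk,
        "html_stripped": html_found,
        "removed_links": removed,
        "sanitized_content": sanitized,
    }
```

With this sketch, `security_report("See [docs](https://example.com) and [win](http://bit.ly/x) <b>now</b>", "test")` keeps the `example.com` link, unlinks the shortener (keeping the text "win"), strips the `<b>` tags, and rates the input `"medium"`. Removing the link target while keeping its visible text preserves readability for the downstream LLM without leaving a clickable exfiltration channel.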

Setup

- Import and activate the workflow

- Send POST requests to the generated webhook URL (`/webhook/security-sanitize`) with the JSON body `{"content": "your_text", "source": "identifier"}`
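A minimal Python client sketch for the request described above, using only the standard library. The base URL and the helper names `build_sanitize_request` and `sanitize_remote` are hypothetical; the sketch also assumes the workflow replies with a JSON body, since the exact response format is not specified here.

```python
import json
import urllib.request

# Hypothetical base URL: replace with the webhook URL n8n shows for your instance.
N8N_BASE_URL = "https://your-n8n-host.example"


def build_sanitize_request(content: str, source: str,
                           base_url: str = N8N_BASE_URL) -> urllib.request.Request:
    """Build a POST request carrying the JSON body the workflow expects."""
    body = json.dumps({"content": content, "source": source}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/webhook/security-sanitize",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def sanitize_remote(content: str, source: str, timeout: float = 30.0) -> dict:
    """Send the request and decode the (assumed JSON) security report."""
    req = build_sanitize_request(content, source)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Usage: `report = sanitize_remote(user_message, "chatbot")`, then forward only the sanitized content from the report to your LLM. Keeping request construction separate from the network call makes the payload easy to inspect and test offline.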

Use cases

- Secure AI chatbots and LLM integrations

- Process user-generated content before AI processing

- Protect RAG systems from data poisoning

- Sanitize external webhook payloads

- Ensure compliance with security standards

Perfect for any organization using AI that needs to prevent prompt manipulation, data exfiltration, and injection attacks while maintaining audit trails for compliance.
