RAG-Powered AI Voice Customer Support Agent (Supabase + Gemini + ElevenLabs)

Last edited 115 days ago

Execution video: YouTube Link

I built a voice-triggered RAG assistant in which ElevenLabs’ conversational model acts as the front end and n8n acts as the brain. Here’s a breakdown of what happens in that workflow:

-

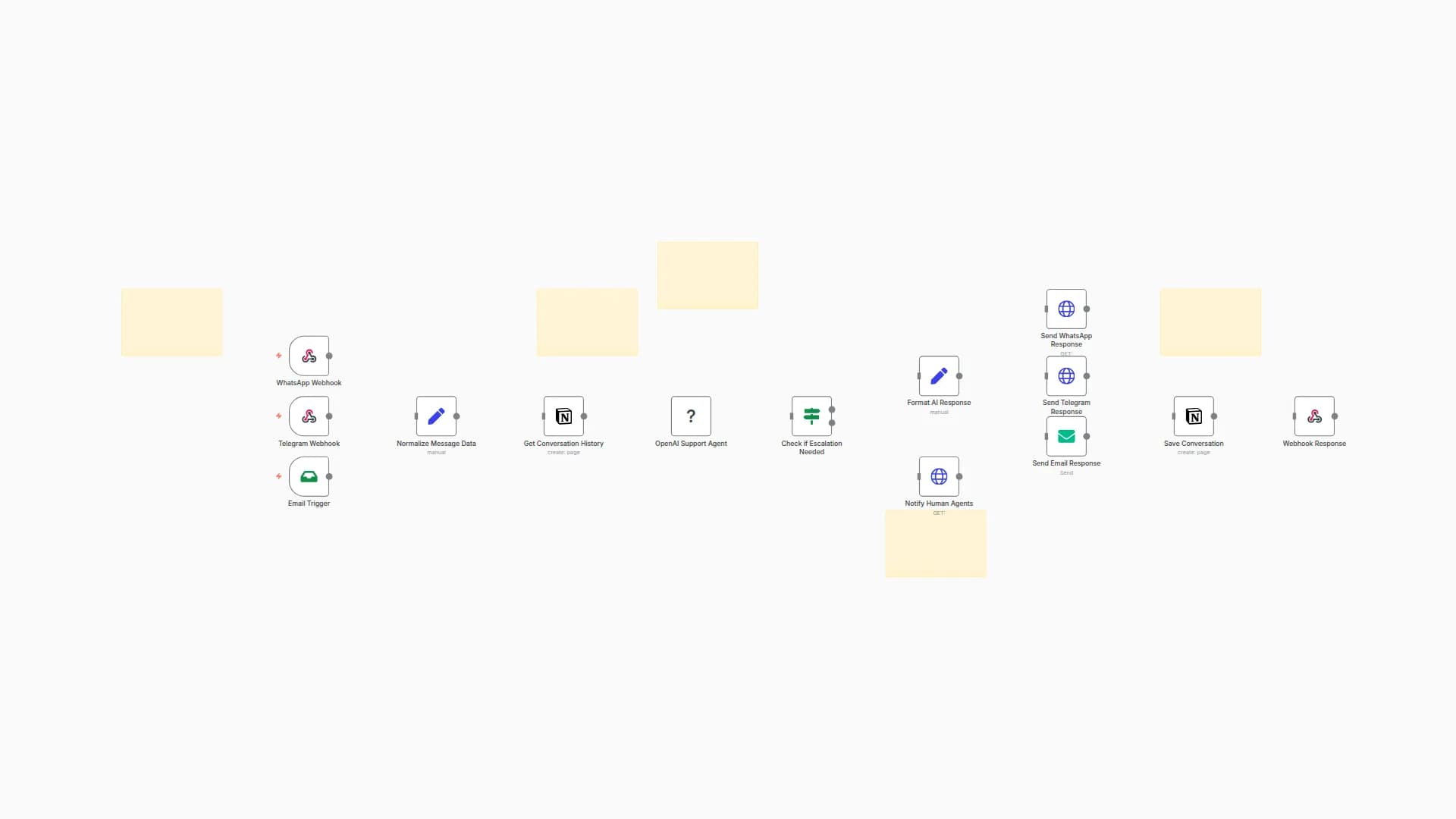

Webhook (

/inf)- Gets hit by ElevenLabs once the user finishes talking.

- Payload includes

user_question.

- Embed User Message (Together API - BAAI/bge-large-en-v1.5)
  - Turns the spoken question into a dense vector embedding.
  - This embedding is the query representation for semantic search.
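As a rough illustration of the embedding step outside n8n, the sketch below builds the HTTP request for the question. It assumes Together's OpenAI-compatible embeddings endpoint at `https://api.together.xyz/v1/embeddings` and a response shaped like `{"data": [{"embedding": [...]}]}`. The function names `build_embedding_request` and `embed` are hypothetical and are not taken from the workflow.

```python
import json
import urllib.request

# Assumed endpoint (OpenAI-compatible); the model name comes from the workflow.
TOGETHER_URL = "https://api.together.xyz/v1/embeddings"
MODEL = "BAAI/bge-large-en-v1.5"


def build_embedding_request(user_question: str, api_key: str) -> urllib.request.Request:
    """Build the POST request that asks for an embedding of the question."""
    body = json.dumps({"model": MODEL, "input": user_question}).encode("utf-8")
    return urllib.request.Request(
        TOGETHER_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def embed(user_question: str, api_key: str) -> list[float]:
    """Send the request and return the embedding vector (performs a network call)."""
    request = build_embedding_request(user_question, api_key)
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    # Assumed response shape: {"data": [{"embedding": [...]}]}
    return payload["data"][0]["embedding"]
```

Keeping request construction separate from the network call makes the step easy to inspect or test without an API key.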

- Search Embeddings (Supabase RPC)
  - Calls `matchembeddings1` to find the top 5 most relevant context chunks from your stored knowledge base.
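The definition of `matchembeddings1` is not shown here, so the sketch below only illustrates what a top-5 similarity search computes, in plain Python. It assumes cosine similarity as the ranking metric and rows shaped like `{"chunk": ..., "embedding": [...]}`. Both are assumptions, and the real function runs inside the database rather than in application code.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_chunks(query_embedding, rows, k=5):
    """Return the `chunk` text of the k rows most similar to the query embedding."""
    scored = [
        (cosine_similarity(query_embedding, row["embedding"]), row["chunk"])
        for row in rows
    ]
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]
```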

- Aggregate
  - Merges all retrieved `chunk` values into one block of text so the LLM gets the full context at once.
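The merge itself is simple string concatenation. A minimal sketch, assuming each retrieved row is a dictionary with a `chunk` field and that a blank line is used as the separator (the separator is an assumption; the workflow does not specify one):

```python
def aggregate_chunks(rows, separator="\n\n"):
    """Join the non-empty `chunk` values of the retrieved rows into one context string."""
    cleaned = (str(row.get("chunk") or "").strip() for row in rows)
    return separator.join(chunk for chunk in cleaned if chunk)
```

Dropping empty chunks keeps stray separators out of the context passed to the model.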

- Basic LLM Chain (LangChain node)
  - The prompt forces the model to answer only from the retrieved context and to sound human, without phrases such as “based on the context”.
  - Uses Google Vertex Gemini 2.5 Flash as the actual model.
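The workflow's actual prompt is not reproduced here, so the template below is purely illustrative. It shows one way to inject the aggregated context and the question while telling the model to stay within the context and avoid meta-phrases. The wording, the fallback instruction for unanswerable questions, and the `build_prompt` name are all assumptions.

```python
# Hypothetical prompt wording; not the prompt used in the workflow.
PROMPT_TEMPLATE = (
    "You are a friendly customer support agent speaking with a caller.\n"
    "Answer using only the information in the context below.\n"
    "If the context does not contain the answer, say you do not know.\n"
    'Speak naturally and never use phrases like "based on the context".\n\n'
    "Context:\n{context}\n\n"
    "Question: {question}"
)


def build_prompt(context: str, question: str) -> str:
    """Fill the template with the retrieved context and the user's question."""
    return PROMPT_TEMPLATE.format(context=context, question=question)
```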

- Respond to Webhook
  - Sends the generated answer straight back as the response to the webhook call, so ElevenLabs can speak it to the user.

You essentially have:

Voice → Text → Embedding → Vector Search → Context Injection → LLM → Response → Voice
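To make the data flow concrete, here is a hedged end-to-end sketch of the text portion of that pipeline (everything between the two voice stages). The embedding, search, and generation steps are passed in as functions so the handler stays independent of any provider. The `handle_webhook` name and the `answer` response key are assumptions; only `user_question` and `chunk` come from the workflow.

```python
from typing import Callable, Sequence


def handle_webhook(
    payload: dict,
    embed: Callable[[str], Sequence[float]],
    search: Callable[[Sequence[float]], list],
    generate: Callable[[str, str], str],
) -> dict:
    """Run question -> embedding -> vector search -> context -> LLM -> response."""
    question = payload["user_question"]
    query_embedding = embed(question)
    rows = search(query_embedding)
    # Context injection: merge retrieved chunks into one block of text.
    context = "\n\n".join(row["chunk"] for row in rows)
    answer = generate(context, question)
    # Assumed response shape; the voice agent would read this text aloud.
    return {"answer": answer}
```

Stub functions can stand in for the real services when checking the flow locally:

```python
result = handle_webhook(
    {"user_question": "What are your opening hours?"},
    embed=lambda text: [0.1, 0.2],
    search=lambda vector: [{"chunk": "We are open 9 to 5."}],
    generate=lambda context, question: f"From our records: {context}",
)
```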
