Evaluate Hybrid Search for Legal Question-Answering using Qdrant & BM25/mxbai


Evaluate Hybrid Search on Legal Dataset

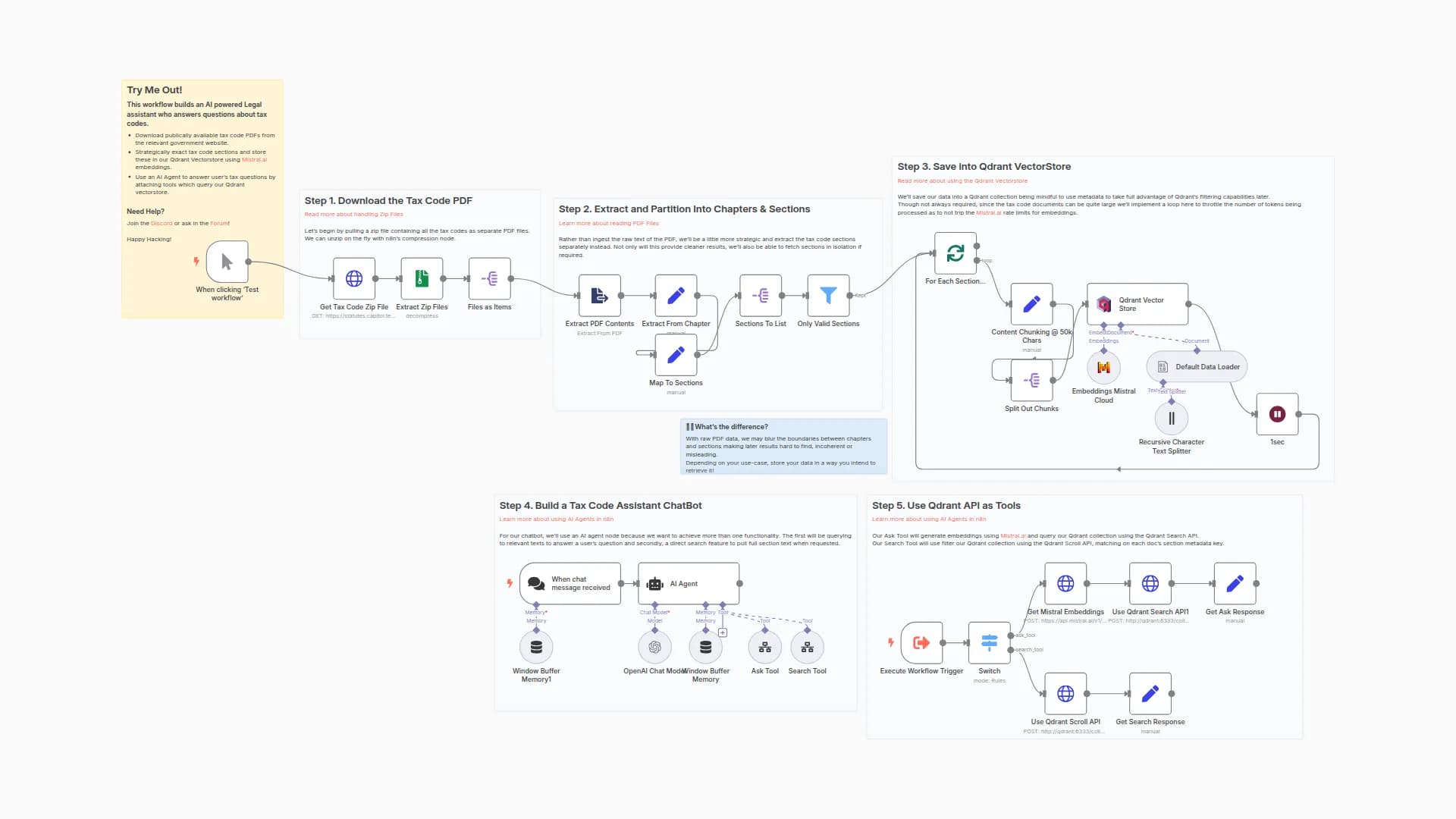

This is the second part of "Hybrid Search with Qdrant & n8n, Legal AI."

The first part, "Indexing", covers preparing and uploading the dataset to Qdrant.

Overview

This pipeline demonstrates how to perform Hybrid Search on a Qdrant collection using questions and the text chunks containing their answers from the LegalQAEval dataset (isaacus).

On a small subset of questions, it shows:

- How to set up hybrid retrieval in Qdrant with:
  - BM25-based keyword retrieval;
  - mxbai-embed-large-v1 semantic retrieval;
  - Reciprocal Rank Fusion (RRF), a simple zero-shot fusion of the two searches;
- How to run a basic evaluation:
  - Calculate hits@1: the percentage of evaluation questions for which the top-1 retrieved text chunk contains the correct answer.
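To make the fusion step concrete, here is a minimal Python sketch of Reciprocal Rank Fusion over two ranked lists of chunk IDs: each chunk's fused score is the sum of 1 / (k + rank) over every list it appears in. It assumes ranks start at 1 and uses k = 60, the constant from the original RRF paper; Qdrant's built-in RRF may use a different constant, so treat this as an illustration of the idea, not a reproduction of Qdrant's scores. The chunk IDs are made up.

```python
from collections import defaultdict


def rrf_fuse(rankings, k=60):
    """Fuse several ranked lists of IDs with Reciprocal Rank Fusion.

    rankings: iterable of lists, each ordered best-first.
    k: smoothing constant (60 is an assumption, not Qdrant's setting).
    Returns (id, score) pairs sorted by fused score, highest first.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each list contributes 1 / (k + rank); top ranks weigh most.
            scores[doc_id] += 1.0 / (k + rank)
    # sorted() is stable even with reverse=True, so ties keep first-seen order.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


bm25_hits = ["c3", "c1", "c7"]   # hypothetical BM25 ranking
dense_hits = ["c1", "c9", "c3"]  # hypothetical mxbai ranking
print([doc for doc, _ in rrf_fuse([bm25_hits, dense_hits])])
# ['c1', 'c3', 'c9', 'c7']
```

Because RRF only looks at ranks, BM25 scores and cosine similarities never have to be put on a common scale, which is what makes it usable without any tuning.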

After running this pipeline, you will have a quality estimate of a simple hybrid retrieval setup.
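The hits@1 metric reduces to a few lines. This sketch assumes you have already collected, for each evaluation question, the text of the top-1 chunk returned by the hybrid query and the question's reference answer; it counts a hit when the answer string appears in that chunk (case-insensitive). The sample data is invented, and the pipeline itself may decide a hit differently, for example by comparing chunk IDs.

```python
def hits_at_1(results):
    """Percentage of questions whose top-1 chunk contains the answer.

    results: list of (top1_chunk_text, answer_text) pairs, one per question.
    """
    if not results:
        raise ValueError("need at least one evaluation question")
    hits = sum(
        1
        for chunk_text, answer in results
        # Case-insensitive substring match; surrounding whitespace is ignored.
        if answer.strip().lower() in chunk_text.lower()
    )
    return 100.0 * hits / len(results)


# Hypothetical evaluation results for four questions.
sample = [
    ("The lease may be terminated with 30 days' notice.", "30 days"),
    ("Damages are capped at the contract value.", "contract value"),
    ("The court dismissed the appeal.", "granted the appeal"),
    ("Jurisdiction lies with the courts of Delaware.", "Delaware"),
]
print(hits_at_1(sample))  # 75.0
```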

From there, you can reuse Qdrant’s Query Points node to build a legal RAG chatbot.

Embedding Inference

- By default, this pipeline uses Qdrant Cloud Inference to convert questions into embeddings.
- You can also use an external embedding provider (e.g., OpenAI).
  - In that case, make small updates to the pipeline, similar to the adjustments shown in Part 1: Indexing.
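Switching providers mainly changes what the dense branch of the query sends to Qdrant. The sketch below builds that branch as a Qdrant Query API `prefetch` entry (JSON for `POST /collections/{collection_name}/points/query`): with Cloud Inference it sends the question text plus a model name for Qdrant to embed; with an external provider it sends a vector you computed yourself. The vector name `mxbai`, the model identifier, and the limit are assumptions; use the names from your Part 1 collection. Whichever provider you choose, questions must be embedded with the same model (and dimension) that embedded the chunks, otherwise similarity scores are meaningless.

```python
import json

# Assumed identifiers; replace with the ones used when indexing in Part 1.
DENSE_VECTOR_NAME = "mxbai"
DENSE_MODEL = "mixedbread-ai/mxbai-embed-large-v1"
DENSE_DIM = 1024  # collection vector size; mxbai-embed-large-v1 outputs 1024 dims


def dense_prefetch(question, vector=None, limit=20):
    """Build the dense-search prefetch entry for Qdrant's Query API.

    vector=None  -> let Qdrant Cloud Inference embed the question text.
    vector=[...] -> use an embedding computed by an external provider.
    """
    if vector is None:
        query = {"text": question, "model": DENSE_MODEL}
    else:
        # A mismatched size means the question and chunks used different models.
        if len(vector) != DENSE_DIM:
            raise ValueError(f"expected {DENSE_DIM} dims, got {len(vector)}")
        query = list(vector)
    return {"query": query, "using": DENSE_VECTOR_NAME, "limit": limit}


print(json.dumps(dense_prefetch("Can a landlord withhold a security deposit?")))
```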

Prerequisites

- The completed Part 1 pipeline, "Hybrid Search with Qdrant & n8n, Legal AI: Indexing", and the collection it creates;
- All requirements of the Part 1 pipeline.

Hybrid Search

The example here is a basic hybrid query. You can extend it with:

- Reranking strategies;

- Different fusion techniques;

- Score boosting based on metadata;

- ...

More details: Hybrid Queries in Qdrant.
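For reference outside n8n, a basic hybrid query of this kind can be written as a single Qdrant Query API request: two `prefetch` branches (BM25 and mxbai) whose candidates are merged with `{"fusion": "rrf"}`. The sketch below only builds the JSON body for `POST /collections/{collection_name}/points/query` and does not send it. It assumes Cloud Inference with the model identifiers `qdrant/bm25` and `mixedbread-ai/mxbai-embed-large-v1` and hypothetical vector names `bm25` and `mxbai`; adapt them to your collection and to how the Query Points node is configured.

```python
import json

# Assumed Cloud Inference model IDs and collection vector names.
SPARSE_MODEL, SPARSE_NAME = "qdrant/bm25", "bm25"
DENSE_MODEL, DENSE_NAME = "mixedbread-ai/mxbai-embed-large-v1", "mxbai"


def hybrid_query_body(question, prefetch_limit=20, limit=1):
    """JSON body for POST /collections/{collection_name}/points/query.

    Each prefetch retrieves `prefetch_limit` candidates from one index;
    RRF then fuses the two candidate lists and returns the top `limit`.
    limit=1 matches a hits@1 evaluation.
    """
    def branch(model, using):
        # Qdrant embeds the text server-side with the given model.
        return {"query": {"text": question, "model": model},
                "using": using, "limit": prefetch_limit}

    return {
        "prefetch": [branch(SPARSE_MODEL, SPARSE_NAME),
                     branch(DENSE_MODEL, DENSE_NAME)],
        "query": {"fusion": "rrf"},
        "limit": limit,
        "with_payload": True,  # return chunk text so answers can be checked
    }


print(json.dumps(hybrid_query_body("What is the limitation period for fraud?"), indent=2))
```

Extensions such as reranking or metadata-based score boosting typically nest this fusion step inside another `prefetch` layer and put a different top-level `query` on top.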

P.S.

- To ask questions about retrieval in Qdrant, join the Qdrant Discord.

- Star the Qdrant n8n community node repo <3
